Transfer and Primacy Bias

Is negative transfer in DRL related to primacy bias?

Course of study:

Kind of thesis:

Programming languages:

Keywords:

Problem:

Deep Reinforcement Learning (DRL) combines deep learning and reinforcement learning, enabling AI agents to learn directly from raw input data and make decisions. DRL uses neural networks to approximate complex policies and value functions, and it has achieved breakthroughs in game playing, robotics, and other complex decision-making tasks.

Primacy bias is the tendency of DRL algorithms to rely on early interactions and ignore useful evidence encountered later. Because they are trained on progressively growing datasets, deep RL agents risk overfitting to their earliest experiences, which negatively affects the rest of the learning process [1].

Transfer Learning (TL) is a machine learning paradigm that helps overcome some of the hurdles of training deep neural networks, from long training times to the need for large datasets. Transferring neural networks in a DRL context can be particularly challenging, and in most cases it results in negative transfer [2].

This raises the question of whether negative transfer in TL is related to primacy bias.

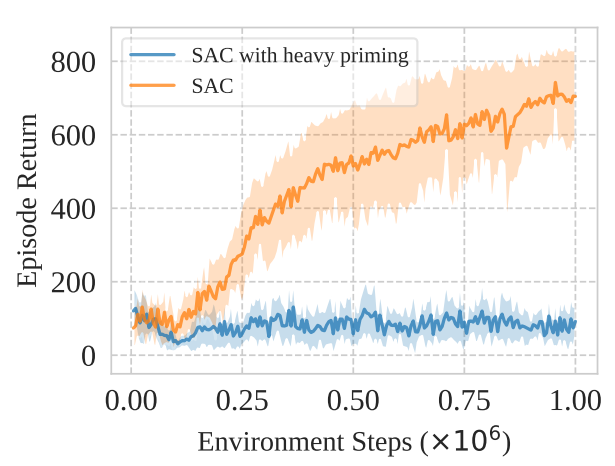

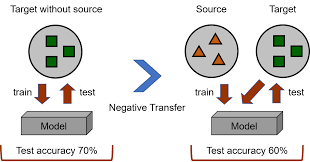

Top figure: the undiscounted return on quadruped-run for SAC with and without heavy priming on the first 100 transitions. An agent affected by primacy bias is unable to learn [1]. Bottom figure: a schematic of negative transfer, in which transferring source information before training on the target yields lower accuracy than training on the target alone.
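As a rough illustration of what "heavy priming on the first 100 transitions" means as a training schedule, the sketch below returns how many gradient updates an agent would run after each environment step. The function names and the default `priming_updates` value are assumptions made for illustration; only the 100-transition figure comes from the caption above, and the exact setup of [1] may differ.

```python
def updates_per_step(env_step, priming_transitions=100, priming_updates=100_000):
    """Return how many gradient updates to run after a 1-indexed environment step.

    priming_updates is a placeholder value (an assumption), not a figure
    taken from this project description.
    """
    if env_step < priming_transitions:
        return 0                # still collecting the initial transitions
    if env_step == priming_transitions:
        return priming_updates  # heavy priming: many updates on very little data
    return 1                    # standard one-update-per-step regime afterwards


def total_updates(total_env_steps, **schedule_kwargs):
    """Total number of gradient updates over a run of total_env_steps steps."""
    return sum(
        updates_per_step(step, **schedule_kwargs)
        for step in range(1, total_env_steps + 1)
    )


if __name__ == "__main__":
    # Primed run versus a baseline that performs a single update at step 100.
    print(total_updates(1_000))
    print(total_updates(1_000, priming_updates=1))
```

Setting `priming_updates=1` gives an unprimed baseline with the same data-collection phase, so the two runs differ only in how strongly the agent is fitted to its first transitions.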

Goal:

Determine whether negative transfer is related to primacy bias in finite environments.

Preliminary work:

Existing work analyses negative transfer and primacy bias separately but, to our knowledge, no study has investigated whether the two phenomena are related.

Tasks:

This project can include

The final tasks will be discussed with the supervisor. Please feel free to get in contact.

References

Supervision

Supervisor: Rafael Fernandes Cunha

Room: 5161.0438 (Bernoulliborg)

Email: r.f.cunha@rug.nl

Co-supervisor: Matthia Sabatelli

Email: m.sabatelli@rug.nl