Transfer in multi-objective environments

Transfer learning using successor features interpreted as a multi-objective problem

Course of study:

Kind of thesis:

Programming languages:

Keywords:

Problem:

The combination of reinforcement learning (RL) with deep learning is a promising approach to tackle important sequential decision-making problems that are currently intractable. One obstacle to overcome is the amount of data needed by learning systems of this type. Complex decision problems can be naturally decomposed into multiple tasks that unfold in sequence or in parallel. By associating each task with a reward function, this problem decomposition can be seamlessly accommodated within the standard RL formalism. If the reward function of a task can be well approximated as a linear combination of the reward functions of previously solved tasks, we can reduce an RL problem to a simpler linear regression problem [1].
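The regression step mentioned above can be illustrated with a minimal sketch. It assumes that, for a batch of sampled transitions, we have the rewards of the previously solved tasks (the columns of `prev_rewards`) and the reward of the new task (`new_reward`). All names and the synthetic data are hypothetical and serve only as an illustration.

```python
import numpy as np

def fit_reward_weights(prev_rewards, new_reward):
    """Least-squares estimate of w such that new_reward ≈ prev_rewards @ w.

    prev_rewards: array of shape (n_transitions, n_prev_tasks)
    new_reward:   array of shape (n_transitions,)
    """
    w, *_ = np.linalg.lstsq(prev_rewards, new_reward, rcond=None)
    return w

# Synthetic data (assumption): rewards of 3 previous tasks on 200 transitions.
rng = np.random.default_rng(0)
prev_rewards = rng.normal(size=(200, 3))
true_w = np.array([1.0, -0.5, 2.0])      # hypothetical mixing weights
new_reward = prev_rewards @ true_w       # noiseless, for illustration only
w_hat = fit_reward_weights(prev_rewards, new_reward)
```

In this noiseless setting the estimate recovers the mixing weights; with real data the fit is only as good as the linear approximation of the new reward.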

Successor features (SFs) are a value-function representation that decouples the dynamics of the environment from the rewards, and generalized policy improvement (GPI) is a generalization of dynamic programming’s policy improvement operation that considers a set of policies rather than a single one. Put together, the two ideas lead to an approach that integrates seamlessly within the RL framework and allows the free exchange of information across tasks [3].
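As a rough illustration of how the two ideas combine, the sketch below assumes that the successor features of each stored policy at the current state are available as an array `psi` of shape (number of policies, number of actions, feature dimension), and that the task is described by a weight vector `w`. The action value of policy i for action a is then the dot product of `psi[i, a]` with `w`, and GPI picks the action with the highest value over all stored policies. The array contents are made up for the example.

```python
import numpy as np

def gpi_action(psi, w):
    """Select an action by generalized policy improvement.

    psi: array (n_policies, n_actions, d), successor features at one state
    w:   array (d,), reward weights of the current task
    """
    q = psi @ w                          # action values, shape (n_policies, n_actions)
    return int(np.argmax(q.max(axis=0)))  # best action over the max across policies

# Hypothetical successor features for 2 policies, 2 actions, 2 features.
psi = np.array([
    [[1.0, 0.0], [0.2, 0.1]],   # policy 0
    [[0.0, 0.3], [0.1, 1.0]],   # policy 1
])
w = np.array([0.0, 1.0])        # task that rewards only the second feature
action = gpi_action(psi, w)
```

Changing `w` changes the selected action without any further learning, which is the sense in which the dynamics (captured in `psi`) are decoupled from the rewards (captured in `w`).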

If reward functions are expressed linearly, and the agent has previously learned a set of policies for different tasks, successor features (SFs) can be exploited to combine these policies and identify reasonable solutions for new problems [2]. The method proposed in [2] allows RL agents to combine existing policies and directly identify optimal policies for arbitrary new problems, without requiring any further interactions with the environment. It also shows that the transfer learning problem tackled by SFs is equivalent to the problem of learning to optimize multiple objectives in RL.

We would like to investigate the relation between SFs and multi-objective problems further, in order to understand the minimum set of policies that can deliver reasonable performance in different types of environments. In other words, we want to gain an empirical intuition of which characteristics of an environment have the greatest impact on the size of this set of policies.
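One possible way to measure the size of such a set is sketched below. It is not the algorithm of [2], only a greedy heuristic under stated assumptions: `psi0` holds the successor features of each candidate policy at the start state, `weights` holds sampled task weight vectors, and a subset is considered sufficient when, for every sampled task, its best policy is within `tol` of the best policy in the full set. The value of the best policy in a set is used as a stand-in for GPI performance. The function name and the data are hypothetical.

```python
import numpy as np

def greedy_policy_subset(psi0, weights, tol):
    """Greedily pick policies until every sampled task is within tol of the full set.

    psi0:    array (n_policies, d), successor features at the start state
    weights: array (n_tasks, d), sampled task weight vectors
    tol:     non-negative tolerated value gap per task
    Returns the list of selected policy indices (not guaranteed to be minimal).
    """
    values = psi0 @ weights.T                  # (n_policies, n_tasks)
    best = values.max(axis=0)                  # best value per task, full set
    current = np.full(weights.shape[0], -np.inf)
    chosen = []
    while np.max(best - current) > tol:
        # Total gap that would remain after adding each candidate policy.
        totals = [np.sum(best - np.maximum(current, v)) for v in values]
        i = int(np.argmin(totals))
        chosen.append(i)
        current = np.maximum(current, values[i])
    return chosen

# Hypothetical start-state successor features of 4 policies, 2 features.
psi0 = np.array([[1.0, 0.0], [0.0, 1.0], [0.6, 0.6], [0.5, 0.5]])
weights = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
loose = greedy_policy_subset(psi0, weights, tol=0.5)
strict = greedy_policy_subset(psi0, weights, tol=0.0)
```

Comparing `len(loose)` and `len(strict)` across environments gives a crude picture of how the required set size grows as the tolerated performance gap shrinks.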

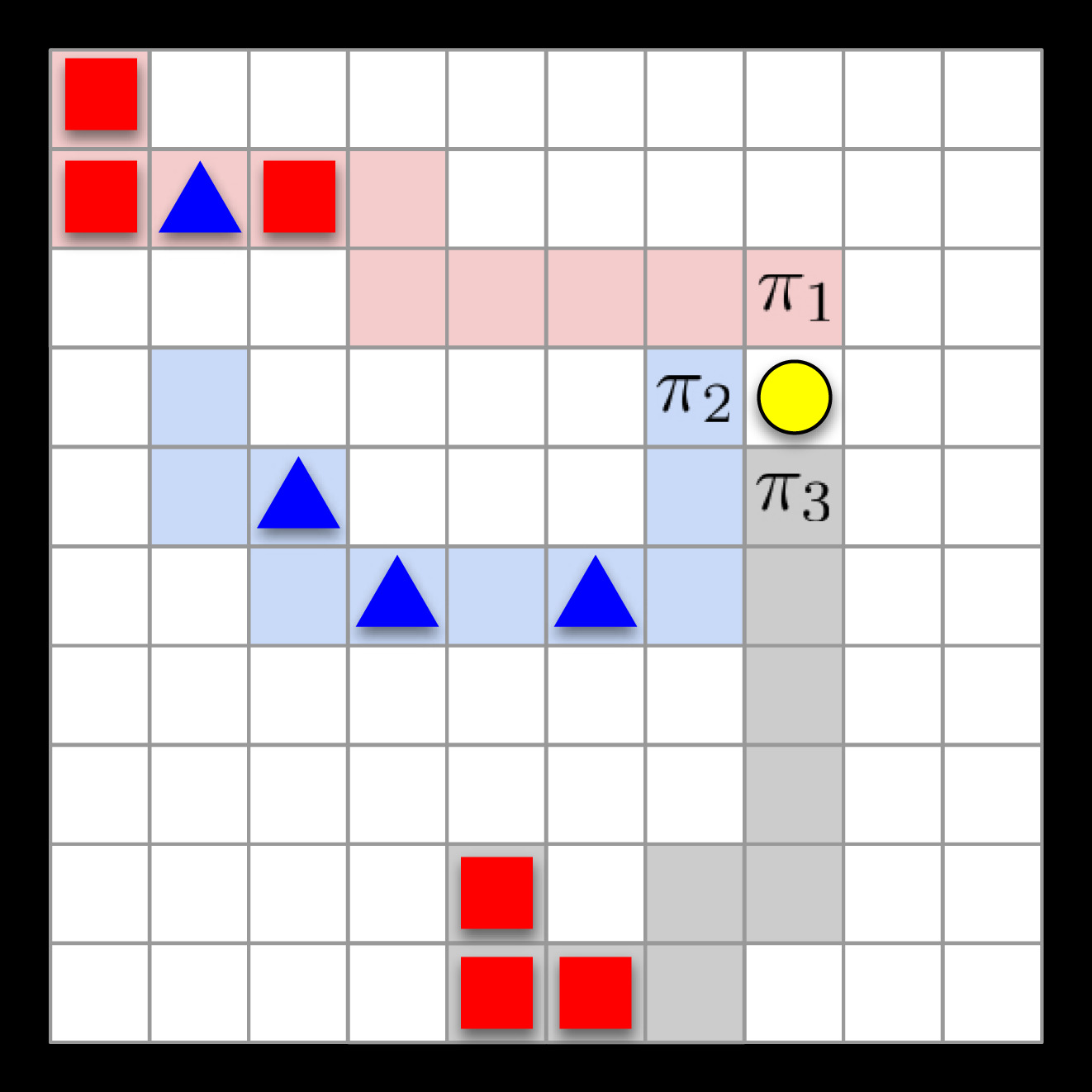

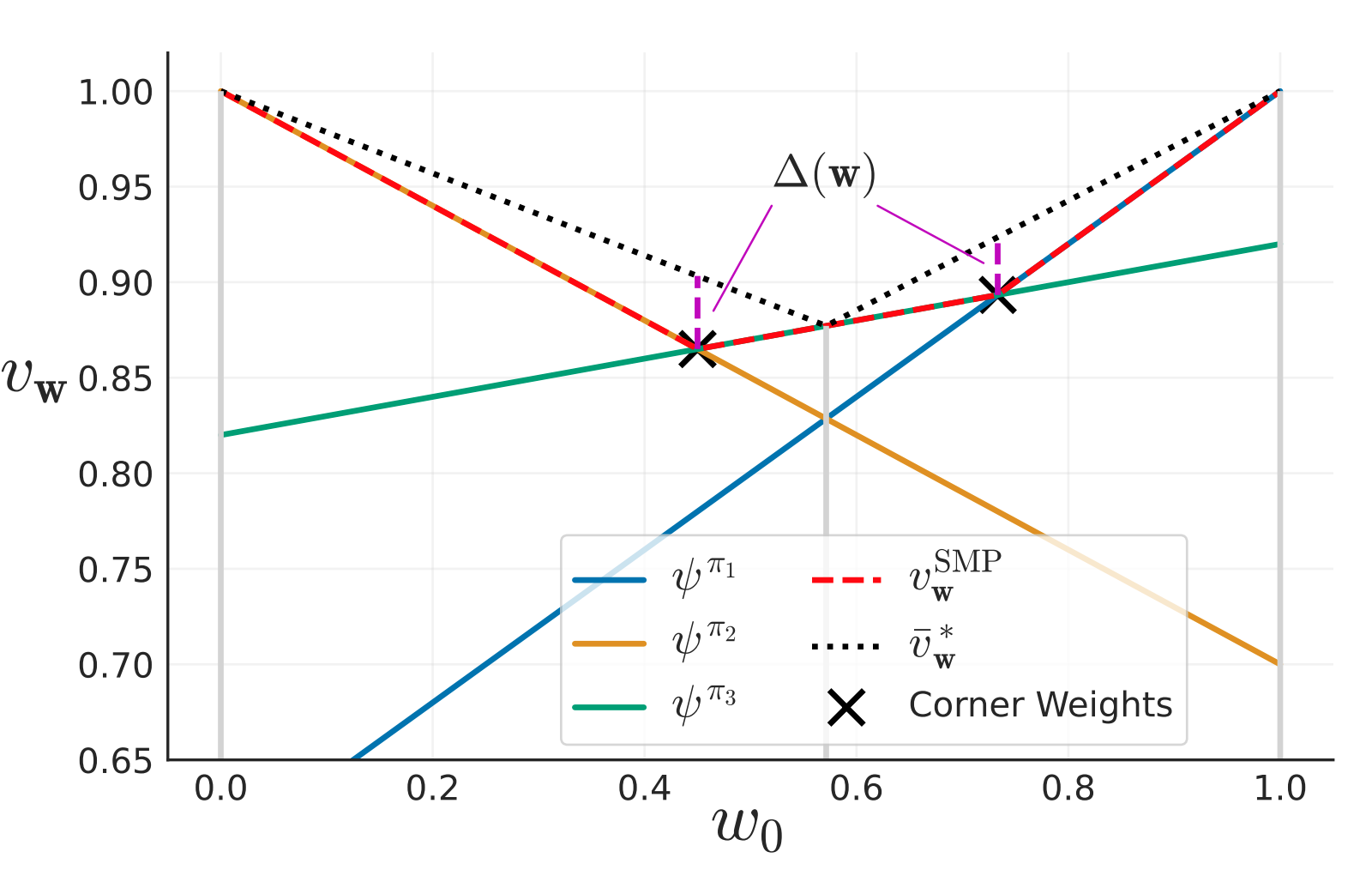

In the top figure, an agent must choose the path that yields the higher return, depending on the rewards given for collecting triangles or squares [1]. The bottom figure is a schematic representation of the algorithm, showing how new policies are added to the set of optimal policies by solving different tasks.

Goal:

Empirically identify how the size of the set of policies needed to deliver reasonable performance with SF and GPI relates to the characteristics of different multi-objective RL environments from the MO-Gymnasium library [4].

Preliminary work:

[1] investigates how to perform transfer learning using SF and GPI, while [2] focuses on an algorithm for finding the set of policies that delivers the optimal solution when using SF and GPI.

Tasks:

This project can include

The final tasks will be discussed with the supervisor. Please feel free to get in contact.

References

Supervision

Supervisor: Rafael Fernandes Cunha

Room: 5161.0438 (Bernoulliborg)

Email: r.f.cunha@rug.nl