Faithfulness of explanations in deep reinforcement learning

Are input attribution methods applied to deep reinforcement learning agents faithful to the policy learned?

Course of study:

Kind of thesis:

Programming languages:

Keywords:

Problem:

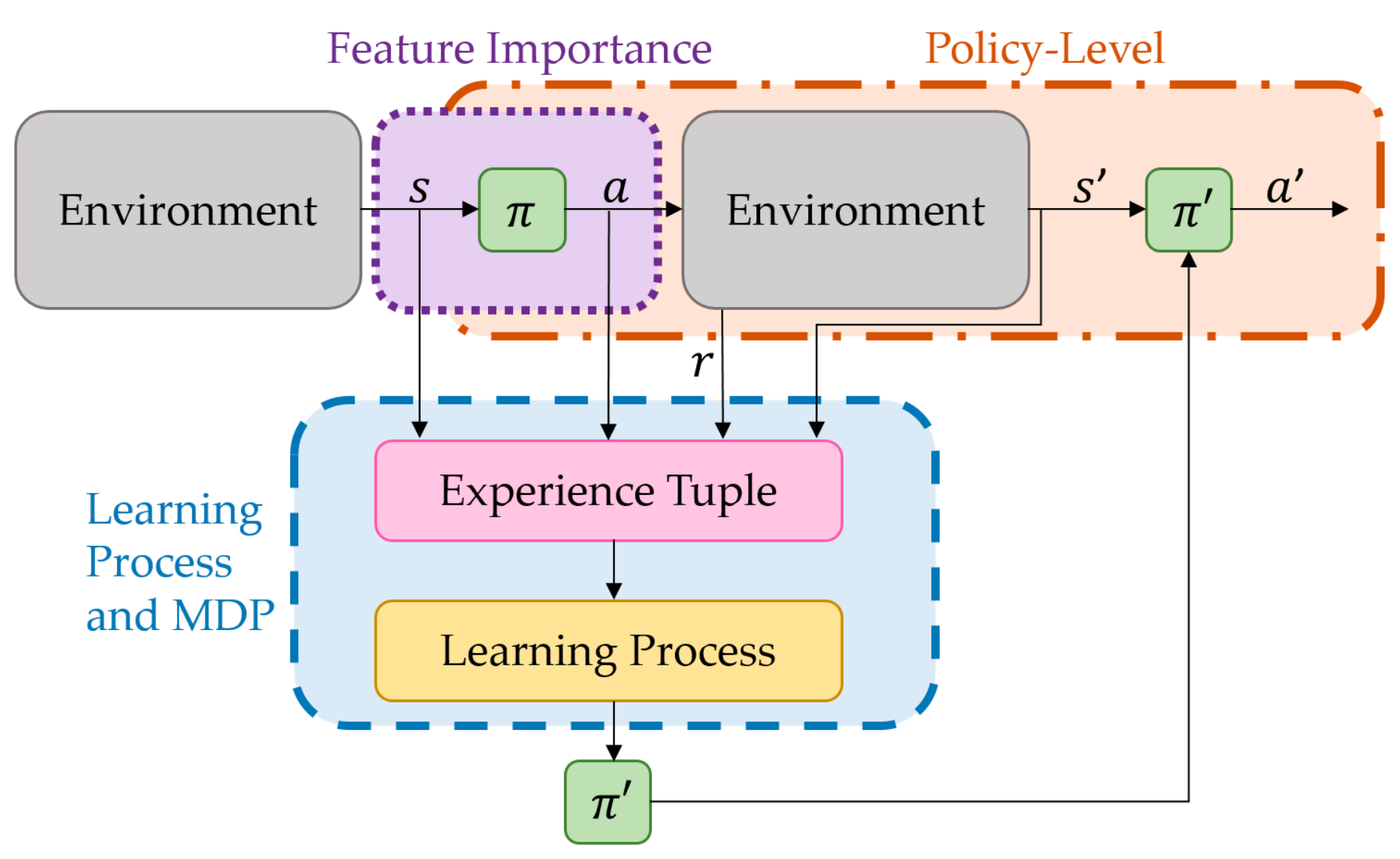

Neural networks (NNs) can also be employed as controllers of Reinforcement Learning (RL)-based agents, where the network learns a policy: a mapping from each state of an environment to the best action to take in that state. In this setting, input attribution (IA) methods, or any other explainability technique, can be a valuable tool for building intuition about why an agent executes a specific action. The field that seeks to explain the behavior of RL agents is called explainable RL (XRL). The goal of XRL is to elucidate the decision-making process of learning agents in sequential decision-making settings.
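As a concrete illustration of what an IA method computes for an RL policy, the sketch below applies Gradient × Input, one simple attribution method among many, to a toy policy network. It is a minimal sketch under stated assumptions: the two-layer ReLU network, its random (untrained) weights, and the helper names `init_policy`, `logits`, and `gradient_x_input` are all hypothetical. A real agent would be a trained deep network, and the gradient would normally come from an autodiff library rather than being derived by hand.

```python
import numpy as np


def init_policy(n_inputs, n_hidden, n_actions, seed=0):
    """Random weights for a hypothetical two-layer ReLU policy network."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(size=(n_hidden, n_inputs)),
        "b1": rng.normal(size=n_hidden),
        "W2": rng.normal(size=(n_actions, n_hidden)),
        "b2": rng.normal(size=n_actions),
    }


def logits(params, state):
    """Forward pass: one unnormalized preference (logit) per action."""
    pre = params["W1"] @ state + params["b1"]
    return params["W2"] @ np.maximum(pre, 0.0) + params["b2"]


def gradient_x_input(params, state):
    """Gradient x Input attribution for the greedy action.

    Returns the chosen action and one score per input feature:
    state_i * d logit_a / d state_i, with the gradient derived by hand
    through the ReLU layer.
    """
    pre = params["W1"] @ state + params["b1"]
    action = int(np.argmax(params["W2"] @ np.maximum(pre, 0.0) + params["b2"]))
    # Backpropagate the chosen logit: only active ReLU units pass gradient.
    grad_hidden = params["W2"][action] * (pre > 0)
    grad_input = params["W1"].T @ grad_hidden
    return action, grad_input * state


if __name__ == "__main__":
    params = init_policy(n_inputs=8, n_hidden=16, n_actions=4)
    state = np.random.default_rng(1).normal(size=8)
    action, attr = gradient_x_input(params, state)
    # Indices of the three input features with the largest attribution magnitude.
    print(action, np.argsort(-np.abs(attr))[:3])
```

The features with the largest attribution magnitude are the ones the method claims the agent "looked at" when choosing its action; whether that claim holds is exactly the faithfulness question this project addresses.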

One of the most pressing challenges of explainable AI (XAI) is assessing the quality of the explanations it provides: many XAI tools are mere approximations of the decision process carried out by the NN, and their explanations can be highly inaccurate in that regard. A straightforward way of evaluating an explanation is to measure its faithfulness, i.e. whether the input regions identified via IA are actually important for the NN. For instance, if we perturb or mask these regions, we would reasonably expect the action chosen by the underlying RL agent to change.
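The masking idea can be turned into a simple deletion test: mask the k features with the highest attribution, check whether the greedy action changes, and compare against masking k randomly chosen features. The sketch below is one possible instance of this test, not an established protocol. For brevity it assumes a hypothetical linear policy (`logits = W @ state + b`), for which Gradient × Input reduces to `W[action] * state`; it also assumes that "masking" means setting a feature to a baseline value of 0, a design choice that can itself change the outcome. All function names are illustrative.

```python
import numpy as np


def greedy_action(W, b, state):
    """Action of a hypothetical linear policy: argmax of W @ state + b."""
    return int(np.argmax(W @ state + b))


def attribution(W, state, action):
    """Gradient x Input for a linear policy, where d logit_a / d state = W[action]."""
    return W[action] * state


def action_flips(W, b, state, k, rng, baseline=0.0):
    """Mask k features and report whether the greedy action changes.

    Returns (flip after masking the top-k attributed features,
             flip after masking k randomly chosen features).
    """
    action = greedy_action(W, b, state)
    scores = np.abs(attribution(W, state, action))
    top_k = np.argsort(-scores)[:k]
    random_k = rng.choice(state.size, size=k, replace=False)
    flips = []
    for idx in (top_k, random_k):
        masked = state.copy()
        masked[idx] = baseline  # "remove" the features by overwriting them
        flips.append(greedy_action(W, b, masked) != action)
    return flips[0], flips[1]


def flip_rates(W, b, states, k, seed=0):
    """Fraction of states whose action changes under top-k vs random-k masking."""
    rng = np.random.default_rng(seed)
    results = np.array([action_flips(W, b, s, k, rng) for s in states])
    return results[:, 0].mean(), results[:, 1].mean()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W, b = rng.normal(size=(4, 20)), np.zeros(4)  # 4 actions, 20 state features
    states = rng.normal(size=(500, 20))
    top, rand = flip_rates(W, b, states, k=3)
    print(f"top-k flip rate: {top:.2f}, random-k flip rate: {rand:.2f}")
```

If the attributions are faithful, one would expect the top-k flip rate to clearly exceed the random-k flip rate; a gap close to zero would suggest that the IA method is not identifying the features the policy actually relies on. For image-based deep RL agents, the same comparison would be run on pixels or patches of the observation instead of individual features.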

Goal:

Are input attribution methods applied to deep reinforcement learning agents faithful to the policy learned?

Preliminary work:

In [1], you can find an introductory course on XAI. [2] offers a comprehensive introduction to the evaluation of XAI techniques. [3] provides an example where input attribution methods exhibit low faithfulness, and [4] presents a literature review of some XRL methods.

Tasks:

This project can include

The final tasks will be discussed with the supervisor. Please feel free to get in contact.

References

Supervision

Supervisor: Rafael Fernandes Cunha

Room: 5161.0438 (Bernoulliborg)

Email: r.f.cunha@rug.nl

Supervisor: Marco Zullich

Room: 5161.0438 (Bernoulliborg)

Email: m.zullich@rug.nl